AI art is booming, but with it comes a whirlwind of questions about ownership, responsibility, and oversight. Who really owns an AI-generated masterpiece? The person who crafted the prompt? The company behind the algorithm? Or no one at all? These questions are more than theoretical: they're heavily influencing creative industries and sparking debates among artists, technologists, and legal experts. Let's dive into the tangled web of AI ownership and oversight, where creativity meets complexity.

Who Owns AI Art?

The question of ownership over AI-generated art has taken center stage in recent months, following a series of influential legal rulings. Most notably, in March 2025, a U.S. federal appellate court reaffirmed that artwork created independently by artificial intelligence cannot be copyrighted. This decision upheld the longstanding rule requiring human authorship for copyright eligibility. However, the court clarified that works involving significant human input (such as editing or guiding AI tools) can qualify for copyright protection, provided the human contribution is substantial and creative.

Globally, the situation remains inconsistent. The European Union's AI Act, whose obligations are being phased in, emphasizes transparency and accountability, requiring providers of generative AI models to publish summaries of their training data, including copyrighted material. While this doesn't directly address copyright for outputs, it signals a growing push for clearer regulations. Meanwhile, in countries like Japan and India, discussions around AI copyright are still emerging, leaving creators in legal limbo.

So, who owns AI-generated art? That's still a big question, but here's another one: who's responsible when AI companies train their models on copyrighted material without permission? I covered this topic a few months ago. While the legal debates rage on, the behavior of these corporations seems less ambiguous. They argue that scraping copyrighted works off the internet to train AI systems is "fair use." ChatGPT will tell you all about it, too. But let's be honest here: this looks less like innovation and more like ignoring copyright law for profit.[1]

Oversight in Practice: Prompting as a Creative Act

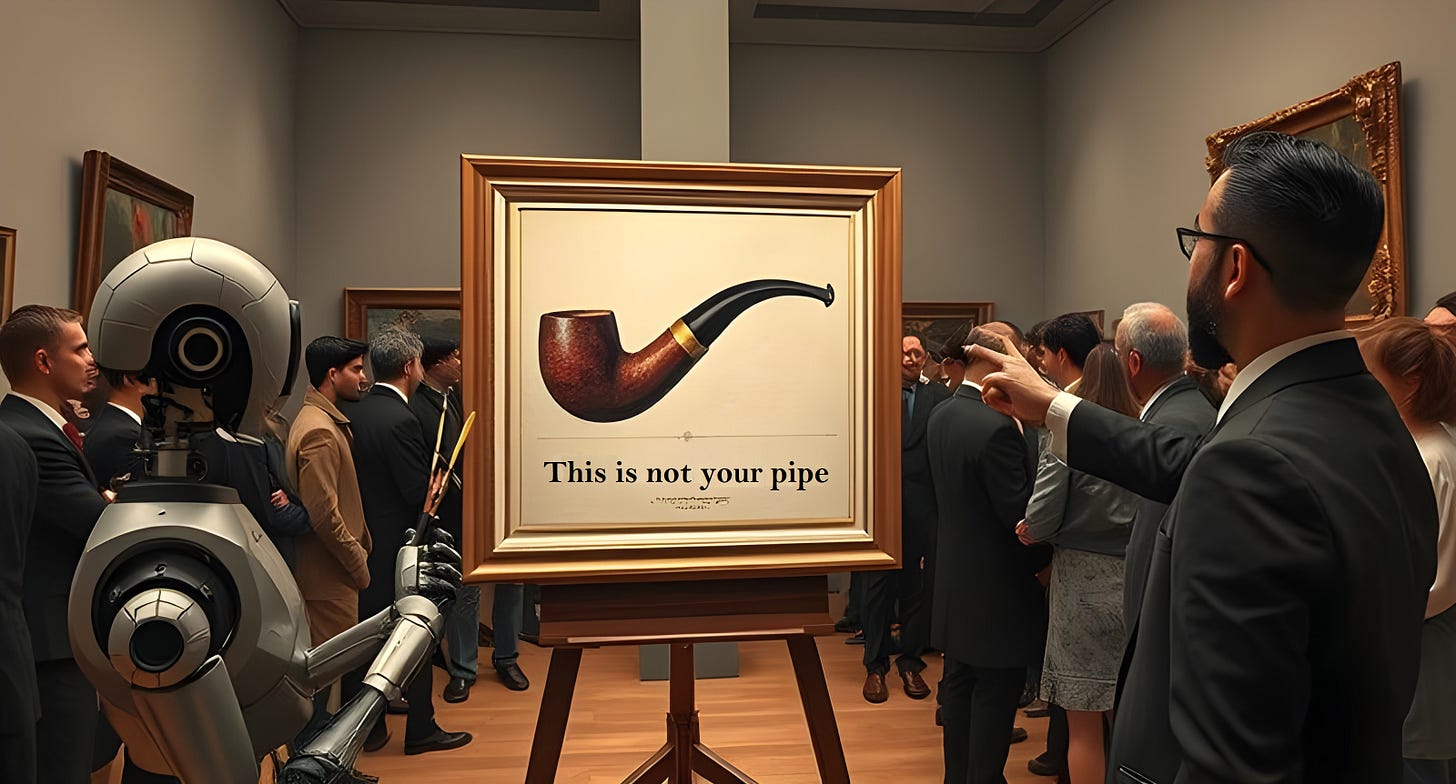

Crafting prompts is often described as a new form of artistry, requiring skill and imagination to coax desired outputs from an AI system. But is prompting enough to claim ownership? Critics argue that prompts are more like instructions than creative expressions, making them difficult to classify as intellectual property. Others see prompting as akin to directing a film, where the director doesn't physically create every scene but still holds creative control.

This debate touches on broader cultural questions about authorship. As Prompting Culture often highlights, prompts can shape narratives and aesthetics in profound ways. But does shaping equal creating? And if not, who should oversee how these tools are used?

The Problem of Oversight: Who’s Really Responsible?

When AI tools go wrong, producing output that's derivative, offensive, or even illegal, responsibility often gets passed around like a hot potato. But let's not overcomplicate things: the blame lies squarely with those who design and deploy these systems. Developers choose to train their models on biased or unlicensed data, knowing full well the risks. Claiming ignorance or hiding behind the idea that "AI is just a tool" doesn't cut it.

Whether it’s societal biases baked into training datasets or blatant exploitation of copyrighted works, these issues aren’t accidents. They’re predictable outcomes of decisions made by corporations prioritizing speed and profit over ethics. Until developers are held accountable for the systems they create, creators and consumers alike will continue to bear the brunt of these failures.

Toward Ethical Ownership

To build trust and accountability in AI artistry, people often suggest measures such as transparency, fair compensation, or even collaborative ownership models.

However, the idea of "fixing" AI copyright issues with transparency or compensation schemes sounds great in theory but crumbles under scrutiny. Labeling hybrid human/AI creations? That's a logistical nightmare.[2] Compensating artists for training data? Nice idea, but how do you fairly pay thousands of contributors whose work was scraped without consent?

Here’s a simpler, more realistic approach: enforce existing copyright laws. If an AI system uses copyrighted material without permission, the companies behind it should be held accountable, just like any other entity that violates intellectual property rights. This isn’t about reinventing the wheel; it’s about applying the rules we already have.

Beyond that, governments need to step up with clear regulations that prevent exploitation while still allowing innovation. It’s not perfect, but it’s a start, and far more practical than vague promises of “fair compensation” or utopian collaboration models.

Conclusion

Creativity isn’t just about the tools we have, but also about how we use them responsibly. But let’s be clear: responsibility doesn’t mean vague ideas of “collaboration” or treating AI as a neutral partner. It means holding tech corporations accountable when they exploit artists’ work for profit under flimsy claims of “fair use.”

Ownership and oversight in AI artistry aren't just legal challenges; they're cultural ones. And the fight for ethical AI isn't just about protecting creators. It's about ensuring that innovation doesn't come at the cost of exploitation. If you care about fairness in the creative process, demand stronger enforcement of copyright laws and push for transparency from AI developers. Creativity deserves better than being reduced to a free-for-all for those with the deepest pockets.

[1] The logic goes something like this: training AI models requires massive datasets, and licensing all that material would be "too expensive" and "stifle innovation." Convenient, right? But copyright law doesn't bend just because compliance is hard or costly. Using copyrighted works without permission to create commercial products is generally not allowed, and for good reason. Until governments step in with meaningful enforcement, creators will keep watching their work being fed to the machine while tech giants rake in billions.

[2] Prompting Culture currently does not label content as AI-assisted, with one exception: the fiction section, which usually gives a basic explanation of the tools and prompts used.
