Enshittification (n.):

The process by which a technology, platform, or system that was once innovative, useful, and enjoyable becomes increasingly frustrating, annoying, and manipulative over time, often due to the prioritization of profit, growth, and user engagement over user experience and well-being.

The term "enshittification" was coined in 2022 by Cory Doctorow, a science fiction author and tech critic, and spread widely after an essay he published on it in early 2023. Doctorow uses it to describe how online platforms decay in stages: first they are good to their users; then they abuse their users to make things better for their business customers, such as advertisers and publishers; and finally they abuse those business customers to claw back all the value for themselves. It's less like a pair of shoes wearing out and more like a restaurant that wins you over with great food at fair prices, then quietly shrinks the portions and raises the prices once it's sure you'll keep coming back.

Doctorow argues that enshittification is a predictable consequence of the way companies prioritize growth and profit over user experience, especially once their users are locked in and leaving would be costly. When a company is small and scrappy, it's often focused on creating a great product that users will love. But as it grows and becomes more successful, it starts to prioritize other things, like making money and expanding its user base. And that's when the enshittification process begins.

Now, I know what you're thinking: "Isn't this just a fancy way of saying that things get worse over time?" And yeah, that's part of it. But enshittification is more than a natural decline; it's a deliberate process driven by the priorities of the companies that create these technologies.

Take Facebook, for example. This may sound like a joke to teenagers nowadays, but when it first launched, Facebook was a cool new way for young people to connect with each other online. It was also simple, easy to use, and actually kind of fun. But over time, Facebook has become a bloated, confusing mess of a platform that's more focused on making money than on providing a good user experience. And that's a classic example of enshittification in action.

So, as a little case study, we're going to take a closer look at the enshittification of Facebook, and explore some of the ways in which it's happened. We'll talk about the design decisions that have contributed to Facebook's decline, and the ways in which the company's priorities have shifted over time. And we'll also explore some of the implications of enshittification for our society and culture.

The Good Old Days

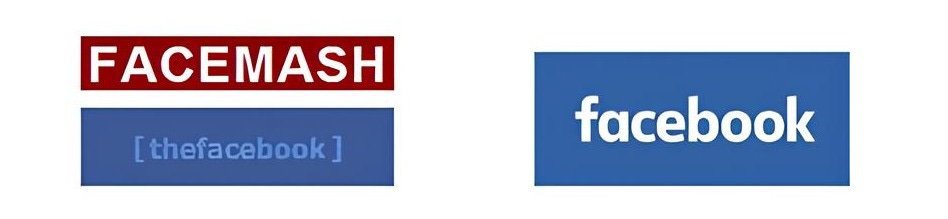

Facebook's early days were a wild ride. It was originally called Thefacebook and was launched in 2004 by Mark Zuckerberg and his Harvard college roommates.

A few months earlier, in late 2003, Zuckerberg had built a solo project called Facemash, a deranged and misogynistic website that asked visitors to rate female students on attractiveness, without their consent, using photos taken without permission from Harvard's online house directories. Not cool. By contrast, Thefacebook was much closer to the platform as we now know it.

The platform quickly gained popularity as a way for college students to connect with each other online. It was simple, easy to use, and actually kind of fun. One of the things that set it apart from other social networks of the time was its focus on real-world connections. You had to use your real name, and at first you needed a college email address to sign up, so your friends were mostly people you actually knew in real life. Some found that creepy; to others, it made Facebook feel more like a community than a random collection of strangers.

As Facebook grew, it started to add more features and functionality. You could share photos and updates with your friends, join groups based on your interests, and even play games with your friends online. It was a really cool way to stay connected with people, and it quickly became one of the most popular websites on the internet.

But even back then, there were some warning signs that Facebook might not always be the best thing for its users. For example, the company's early motto was "Move fast and break things", which was meant to encourage innovation and experimentation. But it also meant that Facebook was willing to push out new features and updates without always thinking through the consequences.

One of the most notable examples of this was the introduction of the News Feed in 2006. The News Feed showed you updates from your friends in a single stream, rather than making you visit each of their profiles individually. It was a great idea, but it also meant that Facebook, not you, now assembled what you saw and decided when you saw it. That put the company in a position to shape, and eventually manipulate, your view of your own social circle. And as we'll see, it was just the beginning of Facebook's efforts to control and manipulate its users.
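To make that shift concrete, here is a minimal Python sketch of a purely chronological feed: it merges each friend's already-sorted posts into one newest-first stream. The `Post` class and `chronological_feed` function are hypothetical illustrations, not Facebook's code. Even in this simplest version, the platform rather than the reader assembles the stream; change the sort key to anything other than time and the platform is deciding what you see first.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    timestamp: int  # hypothetical: a larger number means a more recent post
    text: str


def chronological_feed(profiles: dict[str, list[Post]]) -> list[Post]:
    """Merge every friend's posts into one newest-first stream.

    Assumes each friend's list is already sorted newest-first,
    the way their profile page would show it.
    """
    # heapq.merge combines already-sorted inputs without re-sorting them;
    # reverse=True because each input is in descending timestamp order.
    merged = heapq.merge(*profiles.values(), key=lambda p: p.timestamp, reverse=True)
    return list(merged)


profiles = {
    "alice": [Post("alice", 30, "Exam done!"), Post("alice", 10, "Studying")],
    "bob": [Post("bob", 20, "New profile pic")],
}
for post in chronological_feed(profiles):
    print(post.author, post.text)
```

This prints Alice's newest post, then Bob's, then Alice's older one: one stream instead of two profile pages.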

Ad Money

As Facebook continued to grow, it started to make changes that would ultimately contribute to its enshittification. One of the biggest was its growing reliance on advertising. Now, I know what you're thinking: "Ads are just a normal part of the internet, right?" And yeah, that's true. But the way Facebook implemented ads was different. Its ads were designed to be highly targeted, using all sorts of data about you to figure out what you might be interested in. That mattered, because it meant Facebook was now collecting and exploiting large amounts of personal data about its users, and its appetite for that data would only grow.
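The basic idea behind interest-based targeting can be sketched in a few lines of Python: infer a user's interests from the pages they have liked, then show the ad whose target topics overlap most with those interests. This is a deliberately simplified illustration under stated assumptions; the functions, the page-to-topic mapping and the scoring rule are all hypothetical, and real ad systems use far richer signals plus advertiser auctions. What it does show is why more personal data means more precise matching.

```python
from __future__ import annotations

from collections import Counter


def infer_interests(liked_pages: list[str], page_topics: dict[str, list[str]]) -> Counter:
    """Tally topics across the pages a user has liked (a hypothetical signal)."""
    interests: Counter = Counter()
    for page in liked_pages:
        interests.update(page_topics.get(page, []))
    return interests


def pick_ad(interests: Counter, ads: dict[str, set[str]]) -> str:
    """Return the ad whose target topics best overlap the user's interests."""
    def match_score(ad_name: str) -> int:
        # A Counter returns 0 for topics the user has never shown interest in.
        return sum(interests[topic] for topic in ads[ad_name])
    return max(ads, key=match_score)


page_topics = {
    "Trail Runners Club": ["running", "outdoors"],
    "Marathon News": ["running"],
    "Campus Cooking": ["food"],
}
interests = infer_interests(["Trail Runners Club", "Marathon News"], page_topics)
ads = {"pizza delivery": {"food"}, "running shoes": {"running"}, "tent sale": {"outdoors"}}
print(pick_ad(interests, ads))  # running shoes
```

Every extra liked page sharpens the tally, which is exactly the incentive to keep collecting.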

But the ads themselves were also a problem. They were often annoying and intrusive, popping up in your News Feed and interrupting your browsing experience. And because Facebook was using all sorts of data to target the ads, they often felt creepy and invasive.

This edges into what designers call a "dark pattern": a design technique intended to manipulate users into doing something they might not otherwise do. Ads dressed up to look like ordinary posts, set apart only by a small "Sponsored" label, are a textbook case, nudging people to click on things they didn't really mean to click on.

As Facebook continued to prioritize ads and data collection, it started to make other changes that would contribute to its enshittification. For example, it started to prioritize content from brands and publishers over content from your friends and family. This meant that your News Feed was now filled with all sorts of stuff you didn't really care about, rather than updates from the people you actually knew.

And then there was the algorithm. Facebook's algorithm was supposed to show you the most relevant and engaging content, but it often ended up showing you stuff that was just plain annoying. Remember when Facebook started resurfacing all those "On This Day" memories, whether you wanted to relive them or not?

The algorithm was also designed to keep you engaged, by showing you content that would keep you scrolling and clicking. This is the "attention economy" at work: the idea that human attention is a scarce resource, so companies compete to capture and hold as much of it as they can. And it set the stage for the feedback loop at the heart of Facebook's decline.

Shittier and Shittier

So, let's talk about the feedback loop that's at the heart of Facebook's enshittification. A feedback loop is when a system or process creates a cycle of cause and effect that reinforces itself. In the case of Facebook, the feedback loop is all about attention and engagement.

Here's how it works: Facebook's algorithm is designed to show you content that will keep you engaged and scrolling, so it prioritizes content that's likely to get a lot of likes, comments, and shares. The problem is that the most attention-grabbing content is often not the most valuable or meaningful. More often it's the most sensational or provocative, and that is exactly what an engagement-driven algorithm ends up prioritizing.
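Here is a minimal Python sketch of engagement-based ranking, assuming the platform already has a model that predicts likes, comments and shares for each post. The `Candidate` fields, the weights and the example posts are invented for illustration; this is not Facebook's actual formula. The point is structural: once posts are sorted by predicted engagement, a provocative post can jump ahead of a friend's newer, quieter update.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    text: str
    timestamp: int
    predicted_likes: float
    predicted_comments: float
    predicted_shares: float


# Hypothetical weights: comments and shares are assumed to signal
# stronger engagement than likes, so they count for more.
WEIGHTS = {"likes": 1.0, "comments": 4.0, "shares": 8.0}


def engagement_score(c: Candidate) -> float:
    """Weighted sum of a post's predicted interactions."""
    return (WEIGHTS["likes"] * c.predicted_likes
            + WEIGHTS["comments"] * c.predicted_comments
            + WEIGHTS["shares"] * c.predicted_shares)


def rank_by_engagement(candidates: list[Candidate]) -> list[Candidate]:
    """Order posts by predicted engagement, ignoring how recent they are."""
    return sorted(candidates, key=engagement_score, reverse=True)


posts = [
    Candidate("Friend: got the job!", timestamp=100,
              predicted_likes=5, predicted_comments=1, predicted_shares=0),
    Candidate("Outrage-bait headline", timestamp=50,
              predicted_likes=20, predicted_comments=15, predicted_shares=10),
    Candidate("Old vacation photo", timestamp=10,
              predicted_likes=12, predicted_comments=2, predicted_shares=0),
]
for c in rank_by_engagement(posts):
    print(f"{engagement_score(c):6.1f}  {c.text}")
```

With these made-up numbers the newest post, the friend's good news, lands at the bottom of the feed.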

So the algorithm rewards attention-grabbing content with more visibility. The people and pages that post, from your friends to media outlets, notice what gets seen and produce more of it, which gives the algorithm even more attention-grabbing material to promote, and so on. This is one of the feedback loops at the heart of Facebook's enshittification.
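A toy Python model shows how quickly such a loop can run away. Everything here is hypothetical: posts come in two styles, "calm" and "sensational"; the feed hands out reach in proportion to engagement; and creators shift part of their output toward whichever style earned a bigger share of reach. It is a sketch of the feedback-loop idea under those assumptions, not a model of Facebook's real data.

```python
def simulate_feedback_loop(rounds: int, sensational_share: float,
                           calm_engagement: float = 1.0,
                           sensational_engagement: float = 3.0,
                           adaptation_rate: float = 0.5) -> list[float]:
    """Track the fraction of posts that are sensational over several rounds.

    All parameters are hypothetical. Each round:
      1. the feed hands out reach in proportion to engagement,
      2. creators see what share of reach each style earned,
      3. they move part of their output toward the rewarded mix.
    """
    history = [sensational_share]
    for _ in range(rounds):
        calm_reach = (1 - sensational_share) * calm_engagement
        sensational_reach = sensational_share * sensational_engagement
        # Fraction of total reach that went to sensational posts.
        reach_share = sensational_reach / (calm_reach + sensational_reach)
        # Creators move partway toward the mix the feed rewarded.
        sensational_share += adaptation_rate * (reach_share - sensational_share)
        history.append(sensational_share)
    return history


history = simulate_feedback_loop(rounds=10, sensational_share=0.1)
print([round(s, 2) for s in history])
```

With these made-up numbers, sensational posts start at 10% of what creators produce and climb every round until they dominate. If both engagement values are set equal, the mix stays flat: the loop only runs away when the ranking rewards one style more than the other.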

One of the most insidious aspects of Facebook's feedback loop is the way it uses surveillance capitalism to fuel its growth. Surveillance capitalism is a term popularized by Shoshana Zuboff to describe how companies like Facebook collect data about people's behavior and turn it into predictions that can be sold, most visibly in the form of highly targeted and manipulative advertising.

Facebook's business model is built on the idea of collecting as much data as possible about its users, and then using that data to sell targeted ads to advertisers. This creates a feedback loop where Facebook is incentivized to collect more and more data, and to use that data to create more and more targeted ads.

But here's the thing: this feedback loop isn't just about making money. The same system that sells ads is built to steer what users do. Facebook's algorithm shows you content that will keep you engaged and scrolling, then turns that engagement into more ad sales, which means it is designed to keep you hooked whether or not that's good for you.

And that's where the concept of "variable rewards" comes in. Variable rewards are a technique borrowed from behavioral psychology to keep people engaged and hooked. The idea is that you get a reward, but you don't know when or how often it will come. That uncertainty creates a sense of anticipation and excitement that keeps you coming back for more; it's the same principle that makes slot machines so compelling.
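Psychologists call this a variable-ratio reinforcement schedule, the kind B. F. Skinner studied. The Python sketch below contrasts it with a fixed-ratio schedule that pays out at the same average rate. Treat each "pull" as a refresh, a scroll or a notification check; the function names and parameters are hypothetical, chosen only for illustration.

```python
import random


def fixed_ratio(pulls: int, every: int) -> list[bool]:
    """Reward arrives on every `every`-th pull: perfectly predictable."""
    return [(i + 1) % every == 0 for i in range(pulls)]


def variable_ratio(pulls: int, mean_every: int, seed: int = 0) -> list[bool]:
    """Reward arrives with probability 1/mean_every on each pull.

    Same average payout as fixed_ratio, but you can never tell
    which pull will be the one that pays off.
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    return [rng.random() < 1 / mean_every for _ in range(pulls)]


def gaps(schedule: list[bool]) -> list[int]:
    """Number of pulls between consecutive rewards."""
    hits = [i for i, rewarded in enumerate(schedule) if rewarded]
    return [b - a for a, b in zip(hits, hits[1:])]


print(gaps(fixed_ratio(30, every=5)))  # [5, 5, 5, 5, 5]
print(gaps(variable_ratio(30, mean_every=5, seed=42)))
```

The fixed schedule's gaps never change, so there is no reason to check early. The variable schedule's gaps jump around, so the next pull always might be the one, which is what keeps people pulling.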

Facebook's feed works the same way. Every scroll, refresh, or notification check might turn up something that gets a reaction from you, or nothing at all, and you can't tell in advance which it will be. That makes the feed a system designed to play on your emotions and keep you hooked even when it isn't good for you. In other words, Facebook's feedback loops push users toward what Facebook wants them to do rather than what's good for them, and that problem isn't limited to Facebook; it affects much of the internet.

The Bigger Picture

So, what's the big deal about Facebook's enshittification? Why should we care that a social media platform is becoming increasingly annoying and manipulative?

First of all, Facebook's enshittification is a symptom of a larger problem with the way technology is designed and used in our society. The attention economy, in which companies compete to capture and hold as much of our limited attention as possible, is a major contributor to this problem.

But it's not just about attention. It's also about the way that technology is used to manipulate and control us. Surveillance capitalism, which is the practice of collecting and using data to create highly targeted and manipulative advertising, is a major part of this problem.

And then there's the impact on our mental and emotional well-being. Facebook's enshittification isn't just annoying; there is growing concern, backed by some research, that it may also be harming our mental and emotional health. The constant stream of notifications, the pressure to present a perfect online persona, and the fear of missing out (FOMO) can all take a toll on our well-being.

But it's not just about individual well-being. Critics argue that Facebook's enshittification is also contributing to a decline in civic discourse and social cohesion: an algorithm-driven News Feed that prioritizes sensational and provocative content helps fuel a culture of outrage and division.

So, what can we do about it?

Well, for one thing, we can start by being more mindful of how we use technology. We can take steps to limit our screen time, to use social media in a more intentional way, and to prioritize face-to-face interactions.

We can also demand more from the companies that create and control our technology. We can demand that they prioritize our well-being and safety, rather than just their profits.

And finally, we can start to imagine a different kind of technology, one that is designed to promote our well-being and social cohesion, rather than just to capture and hold our attention.

Did you know that not every social network is built around an ad-driven business? Instead of Facebook and Twitter (now X), users may want to look at more user-focused alternatives like Mastodon, Diaspora, or Bluesky, which run on open or decentralized protocols.
